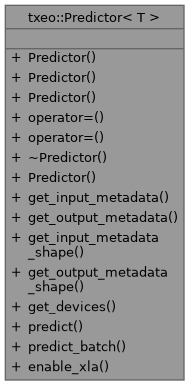

Class that handles the main prediction (inference) tasks.

#include <Predictor.h>

Public Types

| Kind | Declaration |
|---|---|
| using | TensorInfo = std::vector< std::pair< std::string, txeo::TensorShape > > |
| using | TensorIdent = std::vector< std::pair< std::string, txeo::Tensor< T > > > |

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | Predictor ()=delete | |
| | Predictor (const Predictor &)=delete | |
| | Predictor (Predictor &&)=delete | |
| Predictor & | operator= (const Predictor &)=delete | |
| Predictor & | operator= (Predictor &&)=delete | |
| | ~Predictor () | |
| | Predictor (std::filesystem::path model_path, txeo::Logger &logger=txeo::LoggerConsole::instance()) | Constructs a Predictor from a TensorFlow SavedModel directory. |
| const TensorInfo & | get_input_metadata () const noexcept | Returns the input tensor metadata for the loaded model. |
| const TensorInfo & | get_output_metadata () const noexcept | Returns the output tensor metadata for the loaded model. |
| std::optional< txeo::TensorShape > | get_input_metadata_shape (const std::string &name) const | Returns the shape of a specific input tensor by name. |
| std::optional< txeo::TensorShape > | get_output_metadata_shape (const std::string &name) const | Returns the shape of a specific output tensor by name. |
| std::vector< DeviceInfo > | get_devices () const | Returns the available compute devices. |
| txeo::Tensor< T > | predict (const txeo::Tensor< T > &input) const | Perform single input/single output inference. |
| std::vector< txeo::Tensor< T > > | predict_batch (const TensorIdent &inputs) const | Perform batch inference with multiple named inputs. |
| void | enable_xla (bool enable) | Enable/disable XLA (Accelerated Linear Algebra) compilation. |

Detailed Description

Class that handles the main prediction (inference) tasks.

- Template Parameters
  - T: Element type of the tensors the model consumes and produces (for example, float).

Definition at line 24 of file Predictor.h.

Member Typedef Documentation

◆ TensorIdent

using txeo::Predictor< T >::TensorIdent = std::vector<std::pair<std::string, txeo::Tensor<T>>>

A list of (name, tensor) pairs, as passed to predict_batch().

Definition at line 27 of file Predictor.h.

◆ TensorInfo

using txeo::Predictor< T >::TensorInfo = std::vector<std::pair<std::string, txeo::TensorShape>>

A list of (name, shape) pairs, as returned by get_input_metadata() and get_output_metadata().

Definition at line 26 of file Predictor.h.

Constructor & Destructor Documentation

◆ Predictor() [1/4]

txeo::Predictor< T >::Predictor ( ) [explicit], [delete]

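◆ Predictor() [2/4]

txeo::Predictor< T >::Predictor ( const Predictor & ) [delete]

◆ Predictor() [3/4]

txeo::Predictor< T >::Predictor ( Predictor && ) [delete]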

◆ ~Predictor()

txeo::Predictor< T >::~Predictor ( )

◆ Predictor() [4/4]

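txeo::Predictor< T >::Predictor ( std::filesystem::path model_path, txeo::Logger & logger = txeo::LoggerConsole::instance() ) [explicit]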

Constructs a Predictor from a TensorFlow SavedModel directory.

The directory must contain a valid SavedModel (typically with a .pb file). For best performance, use models with frozen weights.

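- Parameters
  - model_path: Path to the SavedModel directory (the directory containing the .pb file).
  - logger: Logger used by the Predictor; defaults to txeo::LoggerConsole::instance().
- Exceptions
  - PredictorError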

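- Note
  - Freezing recommendation (Python example):

```python
import tensorflow as tf
from tensorflow.python.framework.convert_to_constants import convert_variables_to_constants_v2

# Load the SavedModel
model = tf.saved_model.load("path/to/trained_model")
# Freeze a concrete function (here, assuming the default serving signature):
# its variables are replaced by constants embedded in the graph
frozen_func = convert_variables_to_constants_v2(model.signatures["serving_default"])
# Write the frozen graph as a binary .pb file
tf.io.write_graph(
    frozen_func.graph.as_graph_def(),
    "path/to/frozen_model",
    "frozen.pb",
    as_text=False,
)
```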

Member Function Documentation

◆ enable_xla()

void txeo::Predictor< T >::enable_xla ( bool enable )

Enable/disable XLA (Accelerated Linear Algebra) compilation.

- Parameters
  - enable: Whether to enable XLA optimizations.
- Note
  - Requires reloading the model, so prefer calling it before the first inference.

◆ get_devices()

std::vector< DeviceInfo > txeo::Predictor< T >::get_devices ( ) const

Returns the available compute devices.

- Returns
  - Vector of DeviceInfo structures.


◆ get_input_metadata()

const TensorInfo & txeo::Predictor< T >::get_input_metadata ( ) const [noexcept]

Returns the input tensor metadata for the loaded model.

- Returns
  - Const reference to a vector of (name, shape) pairs.
- Example:

```cpp
// Print the name and shape of every model input
for (const auto& [name, shape] : predictor.get_input_metadata()) {
  std::cout << "Input: " << name << " Shape: " << shape << "\n";
}
```

◆ get_input_metadata_shape()

std::optional< txeo::TensorShape > txeo::Predictor< T >::get_input_metadata_shape ( const std::string & name ) const

Returns the shape of a specific input tensor by name.

◆ get_output_metadata()

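const TensorInfo & txeo::Predictor< T >::get_output_metadata ( ) const [noexcept]

Returns the output tensor metadata for the loaded model.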

◆ get_output_metadata_shape()

std::optional< txeo::TensorShape > txeo::Predictor< T >::get_output_metadata_shape ( const std::string & name ) const

Returns the shape of a specific output tensor by name.

- Parameters
  - name: Tensor name from the model signature.
- Returns
  - std::optional containing the shape if a tensor with that name exists, otherwise std::nullopt.
- Example:
  - Unwrap the result with .value_or(txeo::TensorShape{0}) to fall back to a default shape when the name is not found.
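The name-based lookup that get_input_metadata_shape() and get_output_metadata_shape() describe can be pictured with plain standard-library types. This is a minimal sketch under stated assumptions: Shape, Info and find_shape are hypothetical stand-ins for txeo::TensorShape, TensorInfo and the member functions, and the linear search is an illustration, not txeo's actual implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins (not txeo API): a shape is a list of dimension sizes,
// and metadata is a list of (name, shape) pairs, mirroring TensorInfo.
using Shape = std::vector<std::int64_t>;
using Info = std::vector<std::pair<std::string, Shape>>;

// Linear search by tensor name; returns std::nullopt when no tensor has that name.
std::optional<Shape> find_shape(const Info& info, const std::string& name) {
  auto it = std::find_if(info.begin(), info.end(),
                         [&name](const auto& entry) { return entry.first == name; });
  if (it == info.end()) return std::nullopt;
  return it->second;
}
```

For example, find_shape(info, "missing").value_or(Shape{0}) yields the fallback shape when no tensor has that name. A linear scan is a reasonable choice when a model declares only a few inputs and outputs; for frequent lookups, copying the pairs into a std::unordered_map once would be an alternative.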

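◆ operator=() [1/2]

Predictor & txeo::Predictor< T >::operator= ( const Predictor & ) [delete]

◆ operator=() [2/2]

Predictor & txeo::Predictor< T >::operator= ( Predictor && ) [delete]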

◆ predict()

txeo::Tensor< T > txeo::Predictor< T >::predict ( const txeo::Tensor< T > & input ) const

Perform single input/single output inference.

- Parameters
  - input: Input tensor matching the model's expected shape.
- Returns
  - Output tensor with the inference results.
- Exceptions
  - PredictorError
- Example:

```cpp
// Build a 2x2 input tensor from its shape and values, then run inference
txeo::Tensor<float> input({2, 2}, {1.0f, 2.0f, 3.0f, 4.0f});
auto output = predictor.predict(input);
std::cout << "Prediction: " << output(0) << "\n";
```
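predict() expects an input whose shape matches the model's declared input shape, so it can help to check this before calling it. The sketch below is illustrative only: Shape and shape_compatible are hypothetical, not txeo API, and treating -1 as a wildcard follows TensorFlow's convention for unknown dimensions (such as a variable batch size); whether txeo's TensorShape metadata reports unknown dimensions this way is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for txeo::TensorShape: one size per axis.
using Shape = std::vector<std::int64_t>;

// Returns true when a tensor of shape `actual` can be fed where `expected` is declared.
// Assumption: a declared size of -1 marks an unknown dimension and matches any size.
bool shape_compatible(const Shape& expected, const Shape& actual) {
  if (expected.size() != actual.size()) return false;  // ranks must agree
  for (std::size_t i = 0; i < expected.size(); ++i) {
    if (expected[i] != -1 && expected[i] != actual[i]) return false;
  }
  return true;
}
```

In practice the expected shape would come from get_input_metadata_shape(), and a failed check lets the caller reject a mismatched tensor before it reaches the model.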

◆ predict_batch()

std::vector< txeo::Tensor< T > > txeo::Predictor< T >::predict_batch ( const TensorIdent & inputs ) const

Perform batch inference with multiple named inputs.

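- Parameters
  - inputs: Vector of (name, tensor) pairs.
- Returns
  - Vector of output tensors.
- Exceptions
  - PredictorError
- Example:

```cpp
// image_tensor and meta_tensor are txeo::Tensor<float> objects created beforehand;
// each one is paired with the input name declared in the model signature
std::vector<std::pair<std::string, txeo::Tensor<float>>> inputs{{"image", image_tensor},
                                                                {"metadata", meta_tensor}};
auto results = predictor.predict_batch(inputs);
```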
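Because predict_batch() pairs each tensor with an input name, a mismatch between those names and the model's signature is an easy mistake to make. A minimal pre-flight check is sketched below using only standard containers; NameCheck and check_input_names are hypothetical helpers, not part of txeo, and it is an assumption that predict_batch() expects exactly the input names the model declares.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Hypothetical helper (not txeo API): outcome of comparing supplied and declared names.
struct NameCheck {
  std::vector<std::string> missing;     // declared by the model but not supplied
  std::vector<std::string> unexpected;  // supplied but not declared by the model
  bool ok() const { return missing.empty() && unexpected.empty(); }
};

// `declared` would hold the names from the model's input metadata;
// `supplied` the first element of each (name, tensor) pair passed to predict_batch.
NameCheck check_input_names(const std::vector<std::string>& declared,
                            const std::vector<std::string>& supplied) {
  const std::set<std::string> declared_set(declared.begin(), declared.end());
  const std::set<std::string> supplied_set(supplied.begin(), supplied.end());
  NameCheck result;
  for (const auto& name : declared_set)
    if (supplied_set.count(name) == 0) result.missing.push_back(name);
  for (const auto& name : supplied_set)
    if (declared_set.count(name) == 0) result.unexpected.push_back(name);
  return result;
}
```

Because the sets collapse duplicates, a name supplied twice is not flagged; a stricter variant could count occurrences instead.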

The documentation for this class was generated from the following files:

- include/txeo/Matrix.h

- include/txeo/Predictor.h